Local GenAI Code Completion With Context

A couple of weeks ago, news came out about a new large language model (LLM) named DeepSeek-R1. The publisher, DeepSeek, is a Chinese company that was founded in 2023 and focuses on AI research and development. In response, I decided to undertake a small project: combining an LLM with retrieval-augmented generation (RAG) in a simple context-aware code-completion utility.

Some of the promises/claims about this new LLM on its release included the following:

- It is open-sourced and released under the terms of the MIT License

- It is available for use as a local model in systems like ollama, in addition to a DeepSeek-offered SaaS/PaaS cloud service

- Compared to peer models, it was trained using a lot of lower-cost (and lower power) hardware

- Its performance has been alleged to be on-par with larger-sized models

- It remains performant when used even on some mid-range and lower-end hardware

As an advocate of open-source, the openness of the model, as well as the performance claims, piqued my interest. I had been curious about the Generative AI space for a few years, and had even piloted GitHub Copilot when I worked for Vector0, finding it to offer a decent amount of programming-assisting benefits using the popular Vim plugin.

The below blog entry contains some code and docker compose templates. These are also available on my GitHub account:

Disclaimer Regarding Cloud-Hosted AI and Security

One of the common criticisms of cloud-hosted AI assistant services, such as Copilot, is the data collection potential and privacy ramifications of the approach. Many companies have established policies that take a “deny by default” approach to Generative AI services. Even so, many employees violate these same policies every day, whether unknowingly or intentionally. A lot of this is further driven by product marketing departments, which often go so far as to collaborate with social media content creators to promote cloud-hosted third-party generative AI products that offer to organize one’s calendar, listen/watch and take notes during meetings, and even rewrite company emails for you. Much of this collaborative advertising presents the employee’s (remember, played by an actor on social media) unilateral GenAI tool selection as a normal part of everyday life, ignoring the important detail that in nearly every corporate environment, this decision-making power belongs to the IT policymaking department, which determines what third-party services are permitted for employee use.

Here’s one example of this pattern I have started to see proliferate:

In the above video, the speaker not only helps teach the viewer how to use Notion, OpenAI, and Otter, but up-sells the idea that the viewer should use the “free of charge” version, which largely limits the vendor’s liability in the event of a cybersecurity incident, and often means an implied permission grant for the vendor to use whatever content is provided to them for whatever purposes they see fit. It is important to recognize that very few employees are authorized to grant such permission to a third party for company-private content, such as meetings, schedules, slides, emails, etc. As a general rule, any regular employee who follows the instructions outlined in the video above will no doubt have violated multiple corporate policies about authorized use of IT systems.

Don’t get me wrong, these tools are all really helpful, but remember that if you want to use them to optimize your work (the use-case indicated by the video’s content creator), you need to take the following steps before you start using them:

- Reach out to your IT department or IT Compliance / Cyber Security team

- Provide the list of tools you are interested in evaluating (include even the social media content describing them)

- Get explicit written permission to use the tools, and make sure to inquire as to whether the company places limits on their authorized use

- If the employer denies the tools, then that’s the decision you will have to adhere to

Why am I exploring this tangent? Well, to bring it back to the engineering topic, I saw the embrace of open-source here as an opportunity to do some research and development toward a locally-hosted GenAI solution that could potentially be compatible with these entirely reasonable practices.

Docker Compose

For the below examples, I utilize docker compose heavily. It is very useful for managing smaller single-instance service deployments that consist of multiple separate docker containers that communicate amongst one another.

Installing and setting it up is outside the scope of this post, but there is documentation on the project website for Linux, Windows, and MacOS:

Deploying Ollama and Fetching Models

Though DeepSeek-R1 was the catalyst for my interest in this area, this guide is by no means specific to DeepSeek’s models, and should be compatible with any of the models posted in Ollama’s online model library:

One thing I learned from exploring the GenAI ecosystem is that package and system dependency issues are common. As I typically do, I have elected to mitigate these using docker and docker compose to run the software in containers as a managed cluster of services. This allows for expanding the solution with additional components that can be started and stopped with the docker compose up, down, restart, and other commands.

To start off, I made the initial docker-compose.yml template, which creates a single service container that runs ollama, exposes access to the device nodes for my GPU (an AMD Radeon 6800 XT), and uses the container image from the Ollama project built for AMD’s ROCm API. For Nvidia GPUs, there is a slightly more involved process that involves installing nvidia-ctk. Those instructions and a further explanation of the AMD example below are available here.

services:
  ollama:
    # Use the rocm version of the image for AMD GPUs - :latest for non-AMDGPU usage
    image: ollama/ollama:rocm
    container_name: ollama
    ports:
      # Expose port 11434 so that plugins like ollama-vim and others can use the service
      - "127.0.0.1:11434:11434"
    networks:
      - ollamanet
    devices:
      # kfd and dri device access needed for AMDGPU ROCm support to work
      - /dev/kfd:/dev/kfd
      - /dev/dri:/dev/dri
    volumes:
      # Mount the current user's ~/.ollama folder to /root/.ollama (change the first half of this to anywhere you prefer)
      - ~/.ollama:/root/.ollama
    restart: always

# Give an easy name to the virtual network that will be created for integrating the
# ollama and future co-hosted services as the example is built out
networks:
  ollamanet:

Note that the volumes section above routes filesystem activity to a specific folder on the host system. This allows for managing ollama local persistent data, like downloaded models and other data, in a manner whereby it will not be deleted if the containers get destroyed (and, thus, you won’t lose your downloaded models).

After the above is created, you should be able to run the following to start the new ollama service:

docker compose up --wait

The following output, or similar, indicates success:

[+] Running 1/1

✔ Container ollama Healthy

To see if the container started properly:

docker compose exec ollama ollama --version

ollama version is 0.5.7-0-ga420a45-dirty

Listing models (should be none after a fresh install) is as easy as:

docker compose exec ollama ollama list

NAME ID SIZE MODIFIED

Next, try pulling a new model (start with the deepseek-r1:7b model):

docker compose exec ollama ollama pull deepseek-r1:7b

Many of the models are multi-GB bundles, so downloads will take time. The output should look something like this, with the bars acting as progress bars as download proceeds:

pulling manifest

pulling 96c415656d37... 100% ▕█████████████████████████████████████████▏ 4.7 GB

pulling 369ca498f347... 100% ▕█████████████████████████████████████████▏ 387 B

pulling 6e4c38e1172f... 100% ▕█████████████████████████████████████████▏ 1.1 KB

pulling f4d24e9138dd... 100% ▕█████████████████████████████████████████▏ 148 B

pulling 40fb844194b2... 100% ▕█████████████████████████████████████████▏ 487 B

verifying sha256 digest

writing manifest

success

Re-running the “list models” command from earlier, the model should be visible:

docker compose exec ollama ollama list

NAME ID SIZE MODIFIED

deepseek-r1:7b 0a8c26691023 4.7 GB 2 minutes ago

Verify that the service is listening on port 11434:

ss -tunl | grep 11434

tcp LISTEN 0 4096 127.0.0.1:11434 0.0.0.0:*

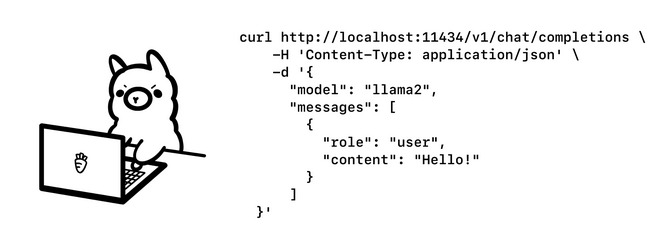

The API exposed by ollama is a JSON-based REST API (documented here).

You can interact with it via any tools typically used for REST API usage. For example, the curl tool can send a prompt to the API to ask a question of the deepseek-r1:7b model. Piping the output from that to jq allows for a more human-readable response.

curl -X POST -H 'Content-Type: application/json' -d '

{

"model": "deepseek-r1:7b",

"prompt": "Hello, tell me about yourself concisely",

"stream": false

}' http://localhost:11434/api/generate | jq .

When not using a UI, the stream field should be set to false so that the response isn’t a real-time stream of the text being generated. When employed in a UI, there’s a common UX approach whereby the AI responses appear to be “typed” as the LLM is responding to a prompt, so the API doesn’t wait until a prompt-response is fully formed to begin the HTTP response. Rather, it streams a sequence of responses to the client in real time as the text is generated. For scripted work, this is noisy and adds complexity to parsing.
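To make the trade-off concrete: when streaming is left on, the body arrives as newline-delimited JSON, one object per chunk, each carrying a fragment of text in its response field, with done set to true on the last one. Below is a minimal sketch of stitching those fragments back together. The helper name join_stream and the sample chunks are made up for illustration, not captured from a real run.

```python
import json

def join_stream(lines):
    """Concatenate the "response" fragments of a streamed /api/generate body."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines between chunks
        chunk = json.loads(line)  # accepts str or bytes
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final chunk carries done=true
    return "".join(parts)

# Hand-written sample chunks (illustrative only)
sample = [
    '{"model": "deepseek-r1:7b", "response": "Hel", "done": false}',
    '{"model": "deepseek-r1:7b", "response": "lo!", "done": false}',
    '{"model": "deepseek-r1:7b", "response": "", "done": true}',
]
print(join_stream(sample))
```

With the requests library, the same helper could be fed from r.iter_lines() on a request made with stream=True, which is roughly what a chat UI does to get the “typing” effect.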

The response:

{

"model": "deepseek-r1:7b",

"created_at": "2025-02-08T21:34:28.029426762Z",

"response": "<think>\nAlright, someone just asked me to tell them about myself in a concise way. I need to figure out the best way to respond.\n\nFirst off, they probably want a quick overview without too much detail. Since I'm an AI, it's important to highlight that I don't have personal experiences or emotions. I should mention my purpose is to assist and provide helpful information or conversation.\n\nI should keep it friendly but professional. Maybe start by saying I'm an AI designed for help. Then explain my limitations in a polite way. Emphasize my goal of being as useful as possible without overstepping.\n\nAlso, adding something about looking forward to assisting them shows willingness to help. Keep the tone positive and open-ended so they feel comfortable asking more questions.\n</think>\n\nI am an artificial intelligence designed to assist with information, answer questions, and provide helpful conversations. My purpose is to support you in a wide range of tasks and inquiries. While I don't have personal experiences or emotions, I aim to be as useful as possible while remaining neutral and non-judgmental. Let me know how I can help!",

"done": true,

"done_reason": "stop",

"context": [

151644,

9707,

....

1492,

0

],

"total_duration": 5524044952,

"load_duration": 2055321942,

"prompt_eval_count": 12,

"prompt_eval_duration": 32000000,

"eval_count": 227,

"eval_duration": 3435000000

}

The response field in the object contains the generated text to display to the submitter of the prompt from the request API call. This validates that ollama is working properly, listening to the correct TCP port (11434) for API requests, and that it is able to load and execute models from the Ollama Library.
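The timing fields in the response are also worth a look. Ollama reports its durations in nanoseconds, so dividing eval_count by eval_duration gives a rough generation speed. A small sketch using the numbers from the response above (the function name tokens_per_second is my own):

```python
def tokens_per_second(resp):
    """Generation speed from an /api/generate response (durations are in nanoseconds)."""
    return resp["eval_count"] / (resp["eval_duration"] / 1e9)

# Timing fields copied from the example response above
resp = {
    "total_duration": 5524044952,
    "load_duration": 2055321942,
    "eval_count": 227,
    "eval_duration": 3435000000,
}

print(f"{tokens_per_second(resp):.1f} tokens/s")
print(f"{resp['load_duration'] / 1e9:.2f} s spent loading the model")
```

For the run shown above this works out to roughly 66 tokens per second, with about two of the five and a half seconds spent loading the model rather than generating.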

Feel free to try downloading other models using the above steps as a guide, and test out prompting them via the API. Some models that will be explored later on are:

- deepseek-coder-v2:16b (or, simply: deepseek-coder-v2:latest)

- qwen2.5-coder:14b

- qwen2.5-coder:7b

- nomic-embed-text:latest

Installing Open-WebUI AI Workspace Platform

The Open-WebUI project is a web-based AI workspace platform. It gives you a user-friendly interface to supported GenAI systems, such as ollama. An example GIF of it in action is below:

So, the next step in improving the utility of our ollama installation is to add a container running open-webui to the services cluster.

Update the docker-compose.yml file by adding another service named open-webui:

services:
  ollama:
    # Use the rocm version of the image for AMD GPUs - :latest for non-AMDGPU usage
    image: ollama/ollama:rocm
    container_name: ollama
    ports:
      # Expose port 11434 so that plugins like ollama-vim and others can use the service
      - "127.0.0.1:11434:11434"
    networks:
      - ollamanet
    devices:
      # kfd and dri device access needed for AMDGPU ROCm support to work
      - /dev/kfd:/dev/kfd
      - /dev/dri:/dev/dri
    volumes:
      # Mount the current user's ~/.ollama folder to /root/.ollama (change the first half of this to anywhere you prefer)
      - ~/.ollama:/root/.ollama
    restart: always

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    ports:
      # Expose port 8080 to host port 3000 so that we can access the open-webui from http://localhost:3000
      - "127.0.0.1:3000:8080"
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434
    networks:
      - ollamanet
    volumes:
      - ~/.open-webui-data:/app/backend/data
    restart: always
    depends_on:
      - ollama

# Give an easy name to the virtual network that will be created for integrating the
# ollama and future co-hosted services as the example is built out
networks:
  ollamanet:

Similar to the ollama service, I use the volumes section to expose a host-filesystem path for the container to store persistent data even when containers are destroyed and rebuilt.

Once added, the same command can be used to start it - docker compose will intelligently recognize that ollama is already running and just initialize the new open-webui service. In the above template, the new service is set up to listen on TCP port 3000 on the local system:

docker compose up --wait

[+] Running 2/2

✔ Container ollama Healthy

✔ Container open-webui Healthy

Similarly, we can verify the service is listening on port 3000:

ss -tunl | grep 3000

tcp LISTEN 0 4096 127.0.0.1:3000 0.0.0.0:*

And, finally, using a web browser, you should be able to visit the http://localhost:3000 URL and get the initial splash page for the Open WebUI service, which allows you to log in. If this is the first time it is run, and the ~/.open-webui-data directory is empty, then it’ll ask you to create a new admin user.

Once a new user is created, and you’re logged in, you can interact with LLMs using a user-friendly chat interface, as well as utilize workspace-management tools to organize your conversations with the LLM. The Open WebUI can also be used to pull new models into your ollama instance, rather than relying upon the command-line approach used earlier. The Open WebUI platform provides a large amount of additional integration capability with ollama for more advanced activities, such as query and retrieval via a knowledge base, tasks implemented in Python code that perform automation actions and incorporate results into responses, and other features.

Using Python to Prompt AI

Ollama can also be interacted with via Python. For example, the following Python code will send a short prompt to the API, save the response, and display both on STDOUT:

import requests
from pprint import pprint

model = "deepseek-r1:7b"

# Build a JSON prompt to send to the deepseek-r1:7b LLM
data = {
    "model": model,
    "prompt": "Hello how are you?",
    "stream": False,
}

# POST the prompt to ollama
r = requests.post("http://localhost:11434/api/generate", json=data)

try:
    # Display the original prompt
    print("Prompt:")
    print(data["prompt"])

    # Display the Response from the AI
    print("\nResponse:")
    print(r.json()['response'])
except Exception as e:
    # In the event of an exception, dump the associated error information
    pprint(e)
    pprint(f"Error: {r.status_code} - {r.text}")

When run (your exact response may vary):

Prompt:

Hello how are you?

Response:

<think>

</think>

Hello! I'm just a virtual assistant, so I don't have feelings, but I'm here and ready

to help you with whatever you need. How are you doing? 😊

Two Models Have a Conversation

Using Python, some more complex implementations are possible. For example, the following Python code can be used to facilitate a conversation between two different LLMs. The Python script acts as a proxy between them, and logs their conversation to STDOUT. Note that a new function has been added named remove_think(msg) that removes the contents of the <think> tags, which are LLM introspectives and wouldn’t be perceived by a normal other party in conversation.

import requests
from pprint import pprint

# To remove the introspective <think>...</think> tags from any response text
# before it is sent to the other model.
def remove_think(msg):
    think_open = msg.find("<think>")
    while think_open >= 0:
        think_close = msg.find("</think>", think_open)
        if think_close >= 0:
            msg = msg[:think_open] + msg[think_close+8:]
        else:
            break
        # Look for the next <think> tag in the shortened message
        think_open = msg.find("<think>")
    return msg

# We will try to have two different models engage in a proxied conversation, where
# this Python code handles proxying the responses from either as prompts for the
# other.
model1 = "deepseek-r1:7b"
model2 = "llama3.1:8b"

# Counter for the number of responses from each model that we want to stop at
count = 10

# Build an initial JSON prompt to bootstrap the conversation
data1 = {
    "model": model1,
    "prompt": "I need you to role-play with me. Your name is Alan and you are lost in the woods. I want you to introduce yourself to me, and ask me how to escape the woods. Do not explain that you are pretending, talk to me as if you are Alan. Also do not tell me what I would say. I will tell you my own responses.",
    "stream": False,
}

while count > 0:
    # POST the prompt to ollama using model1
    r = requests.post("http://localhost:11434/api/generate", json=data1)

    try:
        # Display the prompt sent to model1
        print("Prompt to Data1:")
        print(remove_think(data1["prompt"]))

        # Display the Response from model1
        print("\nResponse from Data1 to Data2:")
        print(r.json()['response'])

        # Format a prompt to model2 that uses model1's response as the prompt text
        data2 = {
            "model": model2,
            "prompt": remove_think(r.json()['response']),
            "stream": False,
        }

        # Send the new prompt to model2
        r = requests.post("http://localhost:11434/api/generate", json=data2)

        # Display the prompt sent to model2
        print("Prompt to Data2:")
        print(remove_think(data2["prompt"]))

        # Display the Response from model2
        print("\nResponse from Data2 to Data1:")
        print(r.json()['response'])

        # Overwrite the prompt to model1 with the response from model2
        data1 = {
            "model": model1,
            "prompt": remove_think(r.json()['response']),
            "stream": False,
        }

        # Decrement the count-down
        count -= 1

        # Loop
    except Exception as e:
        # In the event of an exception, dump the associated error information
        pprint(e)
        pprint(f"Error: {r.status_code} - {r.text}")

Try running the above and see what it produces. Try messing with the prompt to overcome some of the limitations in the initial response. If model1 and model2 are swapped, does that significantly change the conversation?
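As an aside, the tag-stripping step can also be written with a regular expression. The following is a small alternative sketch, not the function used in the script above; the name strip_think is my own. It removes every complete <think>...</think> pair, including ones that span multiple lines, and leaves an unterminated tag untouched.

```python
import re

# Non-greedy match so each <think>...</think> pair is removed separately;
# DOTALL lets "." match the newlines inside the introspective text.
_THINK_RE = re.compile(r"<think>.*?</think>", re.DOTALL)

def strip_think(msg):
    """Return msg with all complete <think>...</think> blocks removed."""
    return _THINK_RE.sub("", msg).strip()

print(strip_think("<think>\nplan the reply\n</think>\n\nHi there"))
```

The trailing strip() also drops the blank lines that the models tend to leave after the closing tag, which keeps the proxied prompts a little tidier.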

Code Completion

A common use case exists for utilizing LLMs for in-editor source-code auto-completion work. This is a common generative coding solution. Many of the models available on Ollama’s Library can accept specially structured input that indicates your intention to use the response for text completion, rather than conversationally. Unfortunately, the tokens used to indicate this are model-specific and highly variable. In addition, whether the response simply contains completion source code, or completion code plus some additional commentary, varies from model to model as well.

Reading the relevant model documentation on HuggingFace can explain how the input prompt should be formatted for code completion tasks. In some cases, the Ollama library page also documents this:

- https://huggingface.co/QtGroup/CodeLlama-13B-QML#5-run-the-model

- https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Instruct#code-completion

- https://github.com/QwenLM/Qwen2.5-Coder?tab=readme-ov-file#3-file-level-code-completion-fill-in-the-middle (followed from model page on HF)

To pick deepseek-coder-v2 (second URL in the list) as an example, the following shows how to use its code-completion syntax:

import requests
from pprint import pprint

# Model to use for code completion (not all models support code completion)
model = "deepseek-coder-v2:latest"

# Model-specific keywords for code completion use cases
# Note that sometimes (deepseek-coder-v2 is an example) these contain extended UTF characters
fim_begin = "<|fim▁begin|>"
fim_cursor = "<|fim▁hole|>"
fim_end = "<|fim▁end|>"

# Build a JSON prompt to send to the deepseek-coder-v2 LLM
data = {
    "model": model,
    # Example prompt pulled from https://huggingface.co/deepseek-ai/DeepSeek-Coder-V2-Instruct#code-insertion
    "prompt": f"""{fim_begin}def quick_sort(arr):
    if len(arr) <= 1:
        return arr
    pivot = arr[0]
    left = []
    right = []
{fim_cursor}
        if arr[i] < pivot:
            left.append(arr[i])
        else:
            right.append(arr[i])
    return quick_sort(left) + [pivot] + quick_sort(right){fim_end}""",
    "stream": False,
    # Set raw=True to get raw output without any additional formatting or processing
    "raw": True,
}

# POST the prompt to ollama
r = requests.post("http://localhost:11434/api/generate", json=data)

try:
    # Display the original prompt
    print("Prompt:")
    print(data["prompt"])

    # Display the Response from the AI (should only be the completion to insert where fim_cursor is)
    print("\nResponse:")
    print(r.json()['response'])
except Exception as e:
    # In the event of an exception, dump the associated error information
    pprint(e)
    pprint(f"Error: {r.status_code} - {r.text}")

Running this returns the following results:

Prompt:
<|fim▁begin|>def quick_sort(arr):
    if len(arr) <= 1:
        return arr
    pivot = arr[0]
    left = []
    right = []
<|fim▁hole|>
        if arr[i] < pivot:
            left.append(arr[i])
        else:
            right.append(arr[i])
    return quick_sort(left) + [pivot] + quick_sort(right)<|fim▁end|>

Response:

for i in range(1, len(arr)):

In this case, the response tells us that the code for i in range(1, len(arr)): should be inserted on the line right after initially assigning right = [].
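In an editor integration, the prompt would be built from the buffer contents and the cursor position rather than typed by hand, and the returned text spliced back in at the cursor. Here is a minimal sketch of those two steps. The function names and the sample buffer are hypothetical, and the token spellings are copied from the example above.

```python
# Fill-in-the-middle tokens as written in the deepseek-coder-v2 example above
FIM_BEGIN = "<|fim▁begin|>"
FIM_HOLE = "<|fim▁hole|>"
FIM_END = "<|fim▁end|>"

def build_fim_prompt(source, cursor):
    """Split the buffer at the cursor offset and wrap both halves in FIM tokens."""
    prefix, suffix = source[:cursor], source[cursor:]
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"

def apply_completion(source, cursor, completion):
    """Insert the model's completion at the cursor offset."""
    return source[:cursor] + completion + source[cursor:]

# Hypothetical buffer with the cursor right after "add("
source = "total = add(\nprint(total)\n"
cursor = len("total = add(")

print(build_fim_prompt(source, cursor))
print(apply_completion(source, cursor, "1, 2)"))
```

The string returned by build_fim_prompt would go in the prompt field of the request shown earlier, with raw set to True.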

Advanced Code Completion

Some models have more advanced code completion and formatting options available. Take the Qwen2.5-Coder model, for instance. According to its documentation, the following tokens are supported that map directly to the ones above in deepseek-coder-v2:

- <|fim_prefix|>: Token marking that the prompt is a code-completion task

- <|fim_suffix|>: Token marking the position in the prompt where the code completion insertion should occur (where cursor is in an editor)

- <|fim_middle|>: Token marking the end of the code-completion prompt

Additionally, the following tokens are supported as well, to help with providing additional context to the input prompt:

- <|repo_name|>: Token labeling a repository name for repository code-completion

- <|file_sep|>: Token marking a file separation point
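To show how these tokens fit together, here is a sketch of two prompt builders. The layouts follow my reading of the Qwen2.5-Coder README (prefix, then suffix, then the middle token last; repository prompts as a repo name line followed by one <|file_sep|> section per file), so treat them as assumptions to verify against the model documentation. The function names and sample file contents are hypothetical.

```python
def qwen_fim_prompt(prefix, suffix):
    """File-level fill-in-the-middle: code before the cursor, code after it,
    then the middle token, after which the model generates the missing part."""
    return f"<|fim_prefix|>{prefix}<|fim_suffix|>{suffix}<|fim_middle|>"

def qwen_repo_prompt(repo_name, files, current_path, prefix):
    """Repository-level completion: other files supply context, and the file
    being edited goes last so the model continues from its end.

    files is a list of (path, content) pairs.
    """
    parts = [f"<|repo_name|>{repo_name}"]
    for path, content in files:
        parts.append(f"<|file_sep|>{path}\n{content}")
    parts.append(f"<|file_sep|>{current_path}\n{prefix}")
    return "\n".join(parts)

print(qwen_fim_prompt("def add(a, b):\n    return ", "\n"))
print(qwen_repo_prompt("demo", [("util.py", "X = 1")], "main.py", "import util\n"))
```

As with the deepseek-coder-v2 example, these prompts would be sent with raw set to True so that ollama does not wrap them in a chat template.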

Installing ChromaDB

Another tool exists which can store and query embeddings. It’s called ChromaDB. This database can take as input a string of text, and then provide snippets from the database that are the closest matches to the input data. This can be very useful for cases where a larger codebase cannot fit entirely within the context window of an LLM. This can help yield context-aware code completion suggestions, in these situations.
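The intuition behind “closest matches” is that each snippet is turned into a vector of numbers (an embedding), and the query is compared against the stored vectors. The toy sketch below illustrates the idea with hand-made three-dimensional vectors and a full scan using cosine similarity. It is not how ChromaDB is implemented (a real vector store uses an index and a configurable distance metric), and the names and vectors are invented for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, store, k=2):
    """Return the names of the k stored vectors most similar to query_vec."""
    ranked = sorted(
        store.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]

# Made-up "embeddings" for three code snippets
store = {
    "parse_args": [0.9, 0.1, 0.0],
    "open_socket": [0.0, 0.2, 0.9],
    "read_config": [0.7, 0.3, 0.1],
}

print(top_k([1.0, 0.0, 0.0], store))
```

Real embeddings from a model such as nomic-embed-text have hundreds of dimensions, but the ranking step works on the same principle.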

We can further expand the docker-compose.yml to deploy a chroma service:

services:
  ollama:
    image: ollama/ollama:rocm
    container_name: ollama
    ports:
      # Expose port 11434 so that plugins like ollama-vim and others can use the service
      - "127.0.0.1:11434:11434"
    networks:
      - ollamanet
    devices:
      # kfd and dri device access needed for AMDGPU ROCm support to work
      - /dev/kfd:/dev/kfd
      - /dev/dri:/dev/dri
    volumes:
      - ~/.ollama:/root/.ollama
    restart: always

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    ports:
      # Expose port 8080 to host port 3000 so that we can access the open-webui from localhost:3000
      - "127.0.0.1:3000:8080"
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434
    networks:
      - ollamanet
    volumes:
      - ~/.open-webui-data:/app/backend/data
    restart: always
    depends_on:
      - ollama

  chroma:
    image: chromadb/chroma:latest
    container_name: chroma
    environment:
      - IS_PERSISTENT=TRUE
    ports:
      - "127.0.0.1:3001:8000"
    networks:
      - ollamanet
    volumes:
      - ./chroma:/chroma/chroma
    restart: always

networks:
  ollamanet:

Then running docker compose up --wait again brings up the new chroma service, leaving the others in place and untouched.

Installing Python Packages

The following requirements.txt contains the dependencies I installed for the LangChain examples from here on out:

chromadb

langchain_community

langchain_chroma

langchain_text_splitters

langchain_ollama

Loading Source into Chroma

In order to work with large datasets, such as the source code and API for a codebase and its dependent libraries, the larger dataset needs to be broken up into a sequence of embeddings, which can then be stored in ChromaDB. We can use ollama for part of this task as well, along with an LLM that is suited for the task of embedding generation. Earlier on, the nomic-embed-text:latest LLM was listed as one of the ones to download.

This should be done now, if it wasn’t already, either through Open WebUI or from the command line:

docker compose exec ollama ollama pull nomic-embed-text:latest

The following script can then be used to store the desired source code into the ChromaDB embeddings store. The root_dir variable set in this script can be changed to point to whatever directory contains the source code desired to be stored for AI retrieval:

#!/usr/bin/env python
import chromadb
from pprint import pprint
from hashlib import sha256
from glob import iglob

# Import LangChain features we will use
from langchain_community.document_loaders.generic import GenericLoader
from langchain_community.document_loaders.parsers import LanguageParser
from langchain_chroma.vectorstores import Chroma
from langchain_ollama import OllamaEmbeddings

# Initialize a connection to ChromaDB to store embeddings
client = chromadb.HttpClient(host='localhost', port=3001)

# Initialize a connection to Ollama to generate embeddings
embed = OllamaEmbeddings(
    model='nomic-embed-text:latest',
    base_url='http://localhost:11434',
)

# Create a new collection (if it doesn't exist already) and open it
chroma = Chroma(
    collection_name='py_collection_test',
    client=client,
    embedding_function=embed,
)

# Start scanning below a Ghidra installation folder
# TODO: Change this to whatever folder under which you want to store your private code
#       for LLM retrieval
root_dir="../../ghidra_11.1.2_PUBLIC"

# Walk the filesystem below root_dir, and pick all *.py files
myglob = iglob("**/*.py",
               root_dir=root_dir,
               recursive=True)

# Even though the GenericLoader from langchain has a glob-based filesystem
# walking feature, if any of the files cause a parser exception, it will
# pass this exception up to the lazy_load() or load() iterator call, failing
# every subsequent file. Use the Python glob interface to load the files one
# at a time with GenericLoader and LanguageParser, and if any throw an exception,
# discard that one file, and continue on to try the next.
for fsentry in myglob:
    try:
        # Try creating a new GenericLoader for the file
        loader = GenericLoader.from_filesystem(
            f"{root_dir}/{fsentry}",
            parser=LanguageParser(language='python'),
            show_progress=False,
        )

        # Try loading the file through its parser
        for doc in loader.lazy_load():
            # Set some useful metadata from the file context
            newdoc = {
                "page_content": doc.page_content,
                "metadata": {
                    # Store the filename the snippet came from
                    "source": doc.metadata["source"],
                    # I'm just using Python as an example, but any supported language will work
                    "language": 'python',
                },
            }

            # Generate a SHA256 "unique id" for each embedding, to help dedupe
            h = sha256(newdoc['page_content'].encode('utf-8')).hexdigest()

            # Store the embedding into ChromaDB
            chroma.add_documents(documents=[doc], ids=[h])
    except Exception as e:
        # If the file failed to load and/or parse, then report it to STDOUT
        pprint(f"Failed with {fsentry}!")
        #pprint(e) # To dump the exception details, if needed
        continue

The above code does a few things:

- Creates a new collection in chroma named py_collection_test - you can choose to isolate your sources into different collections by project or by language or by some other arbitrary criteria, and then limit retrieval to the snippets relevant to your project.

- Discovers all of the files under a provided “root” folder matching a file-glob pattern (in this case, **/*.py which is all Python source code stored in a folder or any of its subfolders)

- Attempts to load each file via langchain’s GenericLoader and parse it with the LanguageParser

- If it is successful in parsing, then the generated text embeddings are stored in ChromaDB

While the example above assumes Python, omitting the language= parameter altogether from the LanguageParser instantiation line will cause it to attempt to detect the language automatically based on the file extension and/or content. LangChain’s LanguageParser supports a long list of programming languages.

If using the language auto-detection, the language parameter will be added to the ChromaDB metadata automatically, if the LanguageParser is certain of the source code language.
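One detail of the loader script worth spelling out is the SHA256 id. Because the id is derived purely from the snippet text, the same snippet always maps to the same id, whichever file or run it came from. The sketch below shows that property in isolation; the helper names are my own, and how the vector store treats a repeated id (overwrite, skip, or error) is something to confirm in the langchain_chroma and ChromaDB documentation.

```python
from hashlib import sha256

def content_id(text):
    """Deterministic id for a snippet: identical text always yields the same id."""
    return sha256(text.encode("utf-8")).hexdigest()

def dedupe(chunks):
    """Map id -> snippet, keeping the first occurrence of each distinct text."""
    unique = {}
    for chunk in chunks:
        unique.setdefault(content_id(chunk), chunk)
    return unique

chunks = ["def a(): pass", "def b(): pass", "def a(): pass"]
unique = dedupe(chunks)
print(f"{len(chunks)} chunks, {len(unique)} unique")
```

This is handy for codebases that vendor the same helper file in several places, since the duplicates collapse to a single entry.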

Additionally, readers familiar with the LangChain toolset might already know that the GenericLoader.from_filesystem helper function natively supports file-globbing and recursive directory traversal, rather than loading a single file at a time. One of its limitations that I have run into is that if any source code file causes loading or parsing errors, then the entire traversal job will fail and error out. A common issue I keep running into is that LanguageParser doesn’t parse extended UTF-8 characters in source code well, which is a problem where the language (such as Python, Rust, Go, and others) natively supports any UTF-8 as part of its defined language specification. To overcome this limitation, I have resorted to replicating the file-glob discovery and directory traversal using the native Python glob.iglob function instead. On each iteration, I call GenericLoader (and then LanguageParser) individually for each file. If parsing any of them causes an exception, it is gracefully caught, the offending file is ignored and reported to the user, and the loop moves on to the next. In my opinion, this is a preferable way to handle “defective input data”: with nothing loaded into ChromaDB I cannot benefit from data retrieval at all, whereas any sizable subset of my dataset, especially a 90%-some portion of it, is near enough to complete that it’ll be reasonably usable for the context-informed code-completion tasks I wish to perform.

Some further examples and documentation about how this is working is available here:

Querying From ChromaDB

The langchain_chroma library provides a Chroma interface which has a similarity_search method that, if given a piece of code (such as the partial user input to complete), will return the top k closest matches from the ChromaDB.

For example, hypothetically we might have the following partial Python code:

from ghidra.program.model.listing import CodeUnit

from ghidra.program.model.symbol import SourceType

fm = currentProgram.getFunctionManager()

functions = fm.get

This snippet of code has been intentionally built to use some simple elements of the Ghidra API. Suppose the user stopped typing after the fm.get and requested a completion to the code. The entire snippet above could be stored in a string named code, and then the query would look something like:

r_docs = chroma.similarity_search(code, k=2)

The two closest matches (as set by k=2) from ChromaDB are returned to the caller.
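To make the idea of a "top k" similarity search concrete, here is a toy sketch in plain Python. It is only a conceptual illustration: the file names and three-dimensional vectors are made up, cosine similarity is assumed as the metric, and a real vector store such as ChromaDB uses an index and a configurable distance function rather than a brute-force sort.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, stored, k=2):
    """Return the k stored (name, vector) pairs most similar to query_vec."""
    ranked = sorted(
        stored,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical 3-dimensional "embeddings"; real ones have hundreds of dimensions
stored = [
    ("snippet_a.py", [0.9, 0.1, 0.0]),
    ("snippet_b.py", [0.7, 0.3, 0.1]),
    ("unrelated.py", [0.0, 0.1, 0.9]),
]
query = [1.0, 0.2, 0.0]
matches = [name for name, _ in top_k(query, stored, k=2)]
print(matches)
```

In the real pipeline the embedding model turns both the stored code and the query string into vectors, which is why the same model has to be used at load time and at query time.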

An example of using the API to query embeddings from ChromaDB:

#!/usr/bin/env python

import chromadb

from langchain_chroma.vectorstores import Chroma

from langchain_ollama import OllamaEmbeddings

# Open a connection to the ChromaDB server

client = chromadb.HttpClient(host='localhost', port='3001')

# Connect to the same embedding model that was used to create the

# embeddings in load_chroma.py

embed = OllamaEmbeddings(

    model='nomic-embed-text:latest',

    base_url='http://localhost:11434',

)

# Open a session to query the py_collection_test collection within Chroma

# this was populated by load_chroma.py

chroma = Chroma(

    collection_name='py_collection_test',

    client=client,

    embedding_function=embed,

)

# An example snippet of Python code that we would like to use to query

# chroma for similarity

code = """from ghidra.program.model.listing import CodeUnit

from ghidra.program.model.symbol import SourceType

fm = currentProgram.getFunctionManager()

functions = fm.get"""

# Perform the similarity search against the chroma database. The k= param

# will control the number of "top results" to return. For this example, we'll

# use 2 of them, but in production more would be better for feeding more

# context to the LLM generating a code completion

r_docs = chroma.similarity_search(code, k=2)

# Iterate across each result from Chroma

for doc in r_docs:

    # Display which source file it came from (using the schema created in load_chroma.py)

    print('# ' + doc.metadata['source'] + ':')

    # Display the embedding snippet content

    print(doc.page_content)

Running the above code against my database pre-loaded with the Python code from Ghidra earlier, I got the following 2 snippets in my output:

# ../../ghidra_11.1.2_PUBLIC/Ghidra/Features/Python/ghidra_scripts/AddCommentToProgramScriptPy.py:

## ###

# IP: GHIDRA

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

##

# Adds a comment to a program.

# DISCLAIMER: This is a recreation of a Java Ghidra script for example

# use only. Please run the Java version in a production environment.

#@category Examples.Python

from ghidra.program.model.address.Address import *

from ghidra.program.model.listing.CodeUnit import *

from ghidra.program.model.listing.Listing import *

minAddress = currentProgram.getMinAddress()

listing = currentProgram.getListing()

codeUnit = listing.getCodeUnitAt(minAddress)

codeUnit.setComment(codeUnit.PLATE_COMMENT, "AddCommentToProgramScript - This is an added comment!")

# ../../ghidra_11.1.2_PUBLIC/Ghidra/Features/Python/ghidra_scripts/external_module_callee.py:

## ###

# IP: GHIDRA

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

##

# Example of being imported by a Ghidra Python script/module

# @category: Examples.Python

# The following line will fail if this module is imported from external_module_caller.py,

# because only the script that gets directly launched by Ghidra inherits fields and methods

# from the GhidraScript/FlatProgramAPI.

try:

print currentProgram.getName()

except NameError:

print "Failed to get the program name"

# The Python module that Ghidra directly launches is always called __main__. If we import

# everything from that module, this module will behave as if Ghidra directly launched it.

from __main__ import *

# The below method call should now work

print currentProgram.getName()

As can be seen above, much of the retrieved content is comments, particularly license text. It may be desirable to strip this type of content during the ChromaDB loading phase, leaving room for more actual code as context in the LLM prompt, which may assist with completion.
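One simple way to do that stripping could be to drop the leading run of comment and blank lines from each file before it is embedded. The strip_leading_comments helper below is a hypothetical sketch, not part of the loader code used in this post, and it assumes Python-style # comments.

```python
def strip_leading_comments(source: str) -> str:
    """Drop the leading run of comment and blank lines (e.g. a license header)."""
    lines = source.splitlines()
    start = 0
    while start < len(lines):
        stripped = lines[start].strip()
        # Stop at the first line that is neither blank nor a "#" comment
        if stripped and not stripped.startswith("#"):
            break
        start += 1
    return "\n".join(lines[start:])
```

The trade-off is that this also removes a shebang line and any descriptive comments at the top of the file (such as a one-line summary of what a script does), which can themselves be useful context. Comments further down in the code are left untouched.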

So far, the internal API and library code were loaded into ChromaDB. It could also be beneficial to collect a large number of usage examples to store in ChromaDB, possibly setting this up as a regular job fed by private code repositories worked on by the team.

Combining ChromaDB Query with FIM Code Insertion

When I was looking at the different “coder” LLMs available, I came across the qwen2.5-coder model from Alibaba, which supports the popular “fill-in-the-middle” (FIM) protocol and also has an extension offering a <|file_sep|> tag. The earlier FIM code can be adapted to take a prompt from a user and extend it with additional code snippets pulled from ChromaDB, producing a context-aware code completion utility.
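As a rough sketch, the prompt assembly can be isolated into one small function. The build_fim_prompt name and the suffix parameter (text that follows the cursor) are assumptions added for illustration; the token strings and the layout of the context section mirror the ones used in the script in this section.

```python
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"
FILE_SEP = "<|file_sep|>"


def build_fim_prompt(code, context_snippets=(), suffix=""):
    """Assemble a FIM prompt, optionally preceded by retrieved context snippets.

    code is the text before the cursor; suffix (hypothetical, empty by
    default) is any text after the cursor.
    """
    if not context_snippets:
        return f"{FIM_PREFIX}{code}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
    # Each retrieved snippet is separated from the next by the file_sep tag
    context = f"\n{FILE_SEP}".join(context_snippets)
    return (
        f"{FIM_PREFIX}{FILE_SEP}\n{context}\n"
        f"{FILE_SEP}{code}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"
    )
```

Calling build_fim_prompt(code) gives the plain FIM prompt, while build_fim_prompt(code, [doc.page_content for doc in r_docs]) gives the context-augmented one, so switching between the two is a matter of passing or omitting the snippets.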

The following Python code is a blend of the earlier FIM code and the more recent ChromaDB query code.

In the version below, the data = data_norag line causes the LLM to be prompted with a query that doesn’t

include any of the results from ChromaDB (they’re simply discarded). This is done for the purpose of

illustrating what the LLM would produce when not context-aware about the Ghidra codebase.

#!/usr/bin/env python

import chromadb

import requests

from pprint import pprint

from langchain_chroma.vectorstores import Chroma

from langchain_ollama import OllamaEmbeddings

# Model to use for code completion (not all models support code completion)

model = "qwen2.5-coder:7b"

# Model-specific keywords for code completion use cases

# Note that sometimes (deepseek-coder-v2 is an example) these contain extended UTF characters

fim_begin = "<|fim_prefix|>"

fim_cursor = "<|fim_suffix|>"

fim_end = "<|fim_middle|>"

file_sep = "<|file_sep|>"

# Open a connection to the ChromaDB server

client = chromadb.HttpClient(host='localhost', port='3001')

# Connect to the same embedding model that was used to create the

# embeddings in load_chroma.py

embed = OllamaEmbeddings(

    model='nomic-embed-text:latest',

    base_url='http://localhost:11434',

)

# Open a session to query the py_collection_test collection within Chroma

# this was populated by load_chroma.py

chroma = Chroma(

    collection_name='py_collection_test',

    client=client,

    embedding_function=embed,

)

# An example snippet of Python code that we would like to use to query

# chroma for similarity

code = """from ghidra.program.model.listing import CodeUnit

from ghidra.program.model.symbol import SourceType

fm = currentProgram.getFunctionManager()

functions = fm.get"""

# Perform the similarity search against the chroma database. The k= param

# will control the number of "top results" to return. For this example, we'll

# grab 5 of them.

r_docs = chroma.similarity_search(code, k=5)

# First, an example of building the query without including the context from ChromaDB

data_norag = {

    "model": model,

    "prompt": f"{fim_begin}{code}{fim_cursor}{fim_end}",

    "stream": False,

    "raw": True,

}

# Then, use the ChromaDB query response as part of the input prompt to the coder LLM

data_rag = {

    "model": model,

    "prompt": "{fim_begin}{file_sep}\n{context}\n{file_sep}{code}{fim_cursor}{fim_end}".format(

        fim_begin=fim_begin, file_sep=file_sep, fim_cursor=fim_cursor, fim_end=fim_end,

        context=f"\n{file_sep}".join([doc.page_content for doc in r_docs]),

        code=code,

    ),

    "stream": False,

    "raw": True,

}

data = data_norag

# POST the request to ollama

r = requests.post("http://localhost:11434/api/generate", json=data)

try:

    # Display the prompt, followed by the ollama response

    print("Prompt:" + data["prompt"])

    print("---------------------------------------------------")

    # Denote where the cursor would be using >>> (the Python CLI prompt)

    print("Response: >>>" + r.json()['response'])

except Exception as e:

    # In the event of an exception, show the details that caused it

    pprint(e)

    pprint(f"Error: {r.status_code} - {r.text}")

The following output was produced by the above, with the >>> in the Response identifying

where the completion string would start. Note that this is completion performed by the LLM without

the results from ChromaDB.

Prompt:<|fim_prefix|>from ghidra.program.model.listing import CodeUnit

from ghidra.program.model.symbol import SourceType

fm = currentProgram.getFunctionManager()

functions = fm.get<|fim_suffix|><|fim_middle|>

---------------------------------------------------

Response: >>>Functions(True)

for function in functions:

function.setName("NewName", SourceType.USER_DEFINED)

To include the ChromaDB context in the prompt, change the earlier line assigning data to read:

data = data_rag

Running the modified script then produced the following output:

Prompt:<|fim_prefix|><|file_sep|>

## ###

# ....

#@category Examples.Python

from ghidra.program.model.address.Address import *

from ghidra.program.model.listing.CodeUnit import *

from ghidra.program.model.listing.Listing import *

minAddress = currentProgram.getMinAddress()

listing = currentProgram.getListing()

codeUnit = listing.getCodeUnitAt(minAddress)

codeUnit.setComment(codeUnit.PLATE_COMMENT, "AddCommentToProgramScript - This is an added comment!")

<|file_sep|>## ###

# IP: GHIDRA

# ....

# The following line will fail if this module is imported from external_module_caller.py,

# because only the script that gets directly launched by Ghidra inherits fields and methods

# from the GhidraScript/FlatProgramAPI.

try:

print currentProgram.getName()

except NameError:

print "Failed to get the program name"

# The Python module that Ghidra directly launches is always called __main__. If we import

# everything from that module, this module will behave as if Ghidra directly launched it.

from __main__ import *

# The below method call should now work

print currentProgram.getName()

<|file_sep|>## ###

# IP: GHIDRA

# ....

# @category: BSim.python

import ghidra.app.decompiler.DecompInterface as DecompInterface

import ghidra.app.decompiler.DecompileOptions as DecompileOptions

def processFunction(func):

decompiler = DecompInterface()

try:

options = DecompileOptions()

decompiler.setOptions(options)

decompiler.toggleSyntaxTree(False)

decompiler.setSignatureSettings(0x4d)

if not decompiler.openProgram(currentProgram):

print "Unable to initialize the Decompiler interface!"

print "%s" % decompiler.getLastMessage()

return

language = currentProgram.getLanguage()

sigres = decompiler.debugSignatures(func,10,None)

for i,res in enumerate(sigres):

buf = java.lang.StringBuffer()

sigres.get(i).printRaw(language,buf)

print "%s" % buf.toString()

finally:

decompiler.closeProgram()

decompiler.dispose()

func = currentProgram.getFunctionManager().getFunctionContaining(currentAddress)

if func is None:

print "no function at current address"

else:

processFunction(func)

<|file_sep|>## ###

# IP: GHIDRA

# ....

##

# Prints out all the functions in the program that have a non-zero stack purge size

for func in currentProgram.getFunctionManager().getFunctions(currentProgram.evaluateAddress("0"), 1):

if func.getStackPurgeSize() != 0:

print "Function", func, "at", func.getEntryPoint(), "has nonzero purge size", func.getStackPurgeSize()

<|file_sep|>## ###

# IP: GHIDRA

# ....

##

from ctypes import *

from enum import Enum

from comtypes import IUnknown, COMError

from comtypes.automation import IID, VARIANT

from comtypes.gen import DbgMod

from comtypes.hresult import S_OK, S_FALSE

from pybag.dbgeng import exception

from comtypes.gen.DbgMod import *

from .iiterableconcept import IterableConcept

from .ikeyenumerator import KeyEnumerator

# Code for: class ModelObjectKind(Enum):

# Code for: class ModelObject(object):

<|file_sep|>from ghidra.program.model.listing import CodeUnit

from ghidra.program.model.symbol import SourceType

fm = currentProgram.getFunctionManager()

functions = fm.get<|fim_suffix|><|fim_middle|>

---------------------------------------------------

Response: >>>Functions(True)

for function in functions:

entryPoint = function.getEntryPoint()

codeUnit = listing.getCodeUnitAt(entryPoint)

if isinstance(codeUnit, CodeUnit):

codeUnit.setComment(SourceType.USER_DEFINED, "This is a user-defined comment!")

LLM Response Comparison

With the two responses cut out of the program output, they can be compared to see what’s going on (first without the ChromaDB context, then with it):

Response: >>>Functions(True)

for function in functions:

function.setName("NewName", SourceType.USER_DEFINED)

Response: >>>Functions(True)

for function in functions:

entryPoint = function.getEntryPoint()

codeUnit = listing.getCodeUnitAt(entryPoint)

if isinstance(codeUnit, CodeUnit):

codeUnit.setComment(SourceType.USER_DEFINED, "This is a user-defined comment!")

As can be seen, the first response (without the added context from ChromaDB) provides a simple iteration suggestion that does relate to Ghidra: SourceType.USER_DEFINED exists in Ghidra’s Python API, as does a setName call. However, the suggestion is simpler than the second response. That the model knows this much without context may be because Ghidra itself is a well-known public codebase, and therefore might have been present in the LLM’s training data.

The second response incorporates more API content from ChromaDB, and it shows: the suggested completion reuses getEntryPoint, getCodeUnitAt, and setComment from the retrieved snippets. It also refers to a listing variable that is defined only in the retrieved context, not in the user’s code, so a suggestion like this still needs review before use.
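For a more mechanical comparison, the two completions can be run through Python's standard difflib module. This is just a convenience sketch: the two lists below are the response lines copied from the output above with leading whitespace omitted, and the no_rag and rag labels are arbitrary names.

```python
import difflib

# Completion produced without ChromaDB context
without_context = [
    'Functions(True)',
    'for function in functions:',
    'function.setName("NewName", SourceType.USER_DEFINED)',
]

# Completion produced with ChromaDB context
with_context = [
    'Functions(True)',
    'for function in functions:',
    'entryPoint = function.getEntryPoint()',
    'codeUnit = listing.getCodeUnitAt(entryPoint)',
    'if isinstance(codeUnit, CodeUnit):',
    'codeUnit.setComment(SourceType.USER_DEFINED, "This is a user-defined comment!")',
]

# unified_diff marks removed lines with "-" and added lines with "+"
diff = list(difflib.unified_diff(
    without_context, with_context,
    fromfile="no_rag", tofile="rag", lineterm="",
))
print("\n".join(diff))
```

The diff makes it easy to see that both completions share the same first two lines and diverge only in the loop body.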

Conclusion

Hopefully the steps detailed above provide some illumination about how LLMs and vector stores (like ChromaDB) can be paired to accomplish Retrieval-Augmented Generation (RAG) tasks. The approach above is a fairly naive RAG solution, and more sophisticated ways to retrieve context for the LLM may exist beyond a simple similarity search in ChromaDB. ChromaDB offers a number of other similarity algorithms, and there are also non-AI context-aware coding systems, such as python-ctags, which is a popular choice to integrate with code editors on Linux-based systems.

The associated code is available in the following GitHub repository: